Amazon has applied for a patent for an audio system that detects the accent of a speaker and changes it to the accent of the listener, perhaps helping eliminate communication barriers in many situations and industries. The application doesn’t mean the company has built it (or that a patent will necessarily be granted), but there’s also no technical reason why it couldn’t.

The application, spotted by Patent Yogi, describes “techniques for accent translation.” Although couched in the requisite patent-ese, the method is quite clear. After a little translation of my own, here’s what it says:

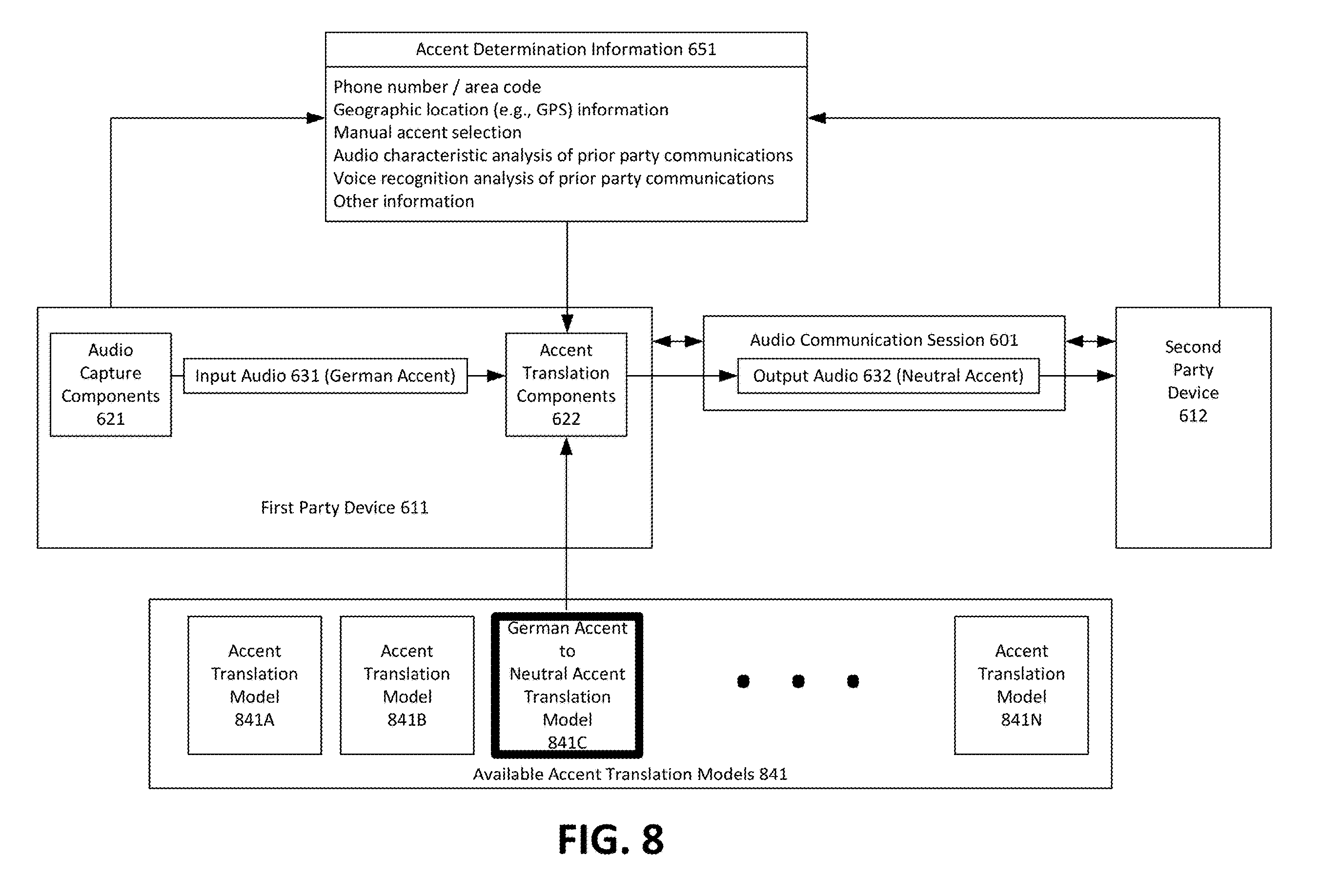

In a two-party conversation, received audio is analyzed to see if it matches one of a variety of stored accents. If so, the audio from each party is output in the accent of the other party.
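To make that concrete, here’s a rough sketch of the routing decision only, not the audio processing itself. It is my own illustration rather than anything taken from the filing: the accent labels, the `STORED_ACCENTS` set, the `Party` class and the `output_accent` function are all hypothetical names, and the accent detection is assumed to have happened already.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the accents the system has stored models for.
STORED_ACCENTS = {"en-US", "en-GB", "en-IN"}


@dataclass
class Party:
    name: str
    # Accent label produced by some upstream detector (assumed, not shown).
    # None means the audio matched no stored accent.
    detected_accent: Optional[str]


def output_accent(speaker: Party, listener: Party) -> Optional[str]:
    """Pick the accent the speaker's audio should be rendered in.

    Returns None when the audio should pass through unchanged: either
    party has no matching stored accent, or both already share one.
    """
    if speaker.detected_accent not in STORED_ACCENTS:
        return None
    if listener.detected_accent not in STORED_ACCENTS:
        return None
    if speaker.detected_accent == listener.detected_accent:
        return None
    return listener.detected_accent


if __name__ == "__main__":
    agent = Party("support agent", "en-IN")
    caller = Party("caller", "en-US")
    # Each direction of the call is converted toward the listener's accent.
    print(output_accent(agent, caller))   # accent used for what the caller hears
    print(output_accent(caller, agent))   # accent used for what the agent hears
```

The only point here is the symmetry: each side of the call is converted toward whoever is listening, and anything the system can’t match is simply left alone.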

It’s kind of a no-brainer, especially considering all the work that’s being done right now in natural language processing. Accents can be difficult to understand, especially if you haven’t spoken with an individual before, and especially without the critical cues from facial and body movements that make in-person communication so much more effective.

The most obvious place for an accent translator to be deployed is in support, where millions of phone calls take place regularly between people in distant countries. It’s the support person’s goal to communicate clearly and avoid adding to the caller’s worries with language barriers. Accent management is a major part of these industries; support personnel are often required to pass language and accent tests in order to advance in the organization for which they work.

A computational accent remover would not just improve their lot, but make them far more effective. Now a person with an Arabic accent can communicate clearly with just about anyone who speaks the same language; no worries if the person on the other end has heavily Austrian-, Russian- or Korean-accented English. If it’s English, it should work.

There are of course lots of other situations where this could be helpful — while traveling, for instance, or conducting international business. I’m sure you can think of a few situations of your own from the last few months or years where an accent reducer or translator would have been handy.

As for the actual execution of this system, that’s a big unknown. But Amazon has a huge amount of money and engineering talent dedicated to natural language processing, and there’s nothing about this system that strikes me as unrealistic or unattainable with existing technology.

It would be a machine learning model, of course, or rather a set of them, each trained on several hours of speech by people with a specific accent. Good thing Alexa has a worldwide presence! Amazon has an avalanche of audio samples coming in from Echoes and other devices all over the place, so many accents are likely already accounted for in their library. From there it’s just a matter of soliciting voice recordings from any group that’s underrepresented in that data set.

Research along these lines has certainly been done already, but Amazon seems to have the jump on others in creating a specific system that puts that knowledge into product form.

Notably, the patent allows for a bit of cheating on the system’s part: it doesn’t have to scramble during the first few seconds to identify your accent, but can stack the deck a bit by checking the device’s location, phone number, previous accents encountered on that line or, of course, simply allowing the speaker to pick their accent manually. There will still be variation within, say, a selected accent of “Pakistani,” but with enough data the system should be able to detect and accommodate that as well.
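One way to picture that deck-stacking is as a prior over candidate accents that gets nudged by each hint before any audio is analyzed. The sketch below is an assumption on my part, not the method in the application: the `accent_prior` function, its parameters and the simple additive weighting are all hypothetical, and the hints are assumed to have been mapped to accent labels already.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Sequence


def accent_prior(
    candidates: Sequence[str],
    manual_choice: Optional[str] = None,
    location_hint: Optional[str] = None,
    phone_hint: Optional[str] = None,
    previous_accents: Iterable[str] = (),
) -> Dict[str, float]:
    """Return a probability for each candidate accent based on side hints.

    Hypothetical weighting: every candidate starts at 1, each matching
    hint adds 1, and each past occurrence on this line adds 1.
    """
    # A manual selection by the speaker overrides every other hint.
    if manual_choice in candidates:
        return {a: 1.0 if a == manual_choice else 0.0 for a in candidates}

    weights = {a: 1.0 for a in candidates}  # uniform starting point
    for hint in (location_hint, phone_hint):
        if hint in weights:
            weights[hint] += 1.0
    for accent, count in Counter(previous_accents).items():
        if accent in weights:
            weights[accent] += count

    total = sum(weights.values())
    return {a: w / total for a, w in weights.items()}


if __name__ == "__main__":
    prior = accent_prior(
        ["en-US", "en-PK", "en-GB"],
        location_hint="en-PK",
        phone_hint="en-PK",
        previous_accents=["en-PK", "en-GB"],
    )
    best = max(prior, key=prior.get)
    print(best, round(prior[best], 3))
```

A real system would presumably combine a prior like this with what its acoustic model hears in the first few seconds, rather than trusting the hints outright.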

As always with patents there’s no guarantee this will actually take product form; it could just be research or a “defensive” patent intended to prevent rivals from creating a system like this in the meantime. But in this case I feel confident that there’s a real possibility a product will ship in the next year or so.