Signals in the brain hop from neuron to neuron at a speed of roughly 390 feet per second. Light, on the other hand, travels 186,282 miles in a second. Imagine the possibilities if we were that quick-witted.

Well, computers are getting there.

Researchers from UCLA on Thursday revealed a 3D-printed, optical neural network that allows computers to perform complex mathematical computations at the speed of light.

In other words, we don’t stand a chance.

Hyperbole aside, researchers believe this computing technique could shift the power of machine learning algorithms, the math that underlies many of the artificial intelligence applications in use today, into an entirely new gear.

The Brain, Computerized

Deep learning is one of the fastest-growing corners of artificial intelligence research and implementation. Machine learning is how Facebook recognizes you and your friends; it’s how computers diagnose cancer from images of human tissues; it’s how your credit score is determined. If your job falls under the heading of “paper pusher,” AI will probably be putting you out of a job.

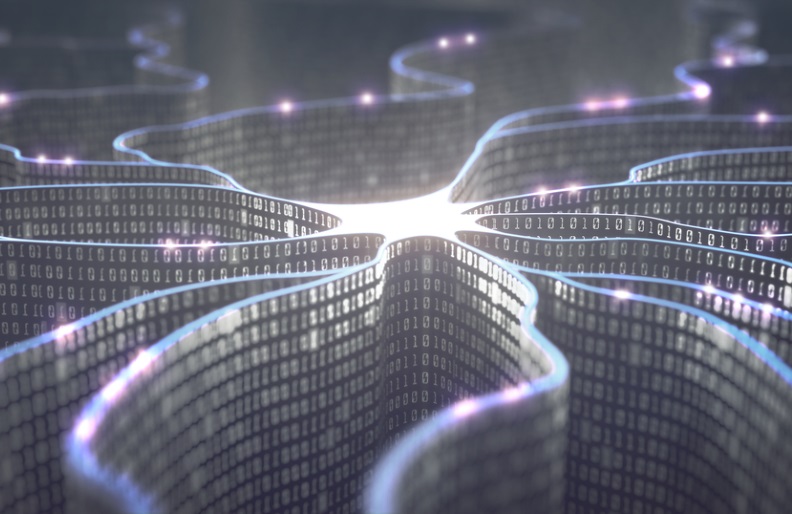

Artificial neural networks garner their brainy comparisons because the mathematical logic of the algorithms is analogous to how scientists think the brain functions. In fleshy brains, we have an entangled mess of neurons that perform one of two functions: They either “fire” or they don’t; they’re binary. But string 100 billion neurons together in an interconnected, infinitely tangled morass of thought spaghetti, and you have yourself a very powerful computer.


Artificial neural networks work on the same logic, but the “neurons” are just highly simplified mathematical equations: data is fed in, a calculation is performed and a solution is spit out. Artificial neural networks contain thousands upon thousands of these mathematical neurons, and they’re arranged in layers. When a neuron performs a calculation, it passes the solution to a neuron in the next layer, where that neuron performs a calculation and passes on the solution in turn.
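To make the layer-by-layer handoff concrete, here is a minimal sketch in Python. It is an illustration only, not the UCLA team's code: the sigmoid squashing function, the weights and the tiny network shape are all assumptions chosen to keep the example short.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: a weighted sum of its inputs, squashed into (0, 1)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))  # sigmoid activation (an assumption)

def layer(inputs, weight_rows, biases):
    """A layer is a group of neurons that all receive the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

def forward(inputs, layers):
    """Pass data through each layer in turn; each layer's output feeds the next."""
    values = inputs
    for weight_rows, biases in layers:
        values = layer(values, weight_rows, biases)
    return values

# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
toy_network = [
    ([[0.5, -0.2], [0.1, 0.8], [-0.7, 0.3]], [0.0, 0.1, -0.1]),
    ([[1.0, -1.0, 0.5]], [0.2]),
]

output = forward([1.0, 0.0], toy_network)
print(output)  # a single number between 0 and 1
```

A real network works the same way, only with thousands of neurons per layer and weights that were learned rather than typed in by hand.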

Researchers train artificial neural networks by feeding them a huge set of solutions to a specific task, say, identifying pictures of trees. When researchers show the neural network images of trees (the solution), the algorithm “learns” what a tree is. As it goes, the algorithm automatically adjusts the math that occurs at each neuron until the output matches the training set solutions. When a computer identifies an object, it’s simply spitting out a mathematical solution that’s presented as a probability (e.g., there’s a 95 percent chance that object is a tree).
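The "adjust the math until the output matches" loop can be sketched with a single neuron. Everything here is hypothetical: the two made-up features (greenness and leafiness), the six training examples and the learning rate are stand-ins, and the update rule is plain gradient descent on a logistic neuron rather than anything specific to the study.

```python
import math
import random

def predict(weights, bias, features):
    """Return the neuron's output as a probability between 0 and 1."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-total))

def train(examples, epochs=2000, learning_rate=0.5, seed=0):
    """Nudge the weights after every example so the output moves toward the label."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = predict(weights, bias, features) - label
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical training set: (greenness, leafiness) -> 1 for "tree", 0 otherwise.
examples = [
    ([0.9, 0.8], 1), ([0.8, 0.9], 1), ([0.7, 0.7], 1),
    ([0.1, 0.2], 0), ([0.2, 0.1], 0), ([0.3, 0.2], 0),
]

weights, bias = train(examples)
p_tree = predict(weights, bias, [0.85, 0.9])      # a new, tree-like input
p_not_tree = predict(weights, bias, [0.15, 0.1])  # a new, un-tree-like input
print(f"tree-like input: {p_tree:.0%} chance it is a tree")
print(f"other input: {p_not_tree:.0%} chance it is a tree")
```

The final number is the probability the article describes: the network never says "tree," only how confident it is.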

The AI Engines

You need an incredible amount of computing power to make an artificial neural network worth all the trouble. And right now, the computing equivalent of a V8 engine is the graphics processing unit, or GPU. These little circuit board workhorses make video games look beautiful, as they accelerate the rapid-fire mathematical calculations required to render images smoothly. If you string chains of these GPUs together, you can multiply their power and mine cryptocurrencies or run artificial intelligence programs. It’s no wonder there’s currently a shortage of these things.

But, if you think about it, even the finest GPUs on the market are still manufactured with silicon and copper. Information travels as electrical impulses along intricate circuit board highways. Researchers led by UCLA’s Xing Lin, however, 3D-printed a multi-layered neural network that relays information via pulses of light. And sending information via light, rather than via electric pulses along metallic roadways, is like the difference between driving a car and flying a jet to your destination.

Now, researchers didn’t entirely replace the GPU. Their optical neural network only performed the recognition task — the entire training step was performed via computer. In other words, it’s not an entire system, but it’s a start.

To demonstrate their device’s performance, they tuned their algorithm to identify handwritten numbers by feeding it 55,000 images of the numbers zero to nine. Then, they tested the optical neural network on 10,000 new images. It correctly identified the images with 91.75 percent accuracy, performing the calculations at the speed of light.
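For a sense of what that score means in raw counts, here is a quick back-of-the-envelope check. The only inputs taken from the study are the 10,000 test images and the 91.75 percent figure; the five-image sample at the end is made up to show how accuracy is tallied.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)

test_images = 10_000
reported_accuracy = 0.9175

correct = round(test_images * reported_accuracy)  # images identified correctly
missed = test_images - correct                    # images it got wrong
print(correct, missed)

# Hypothetical five-digit sample: four of the five guesses match.
sample_accuracy = accuracy([7, 2, 1, 0, 4], [7, 2, 1, 0, 9])
print(sample_accuracy)
```

In other words, the printed network got 9,175 of the new digits right and fumbled 825.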

How It Works

Rather than adjusting the math at each neuron, researchers say the optical network tunes its neurons by changing the phase and amplitude of light at each neuron. And rather than having a 1 or 0 as the solution at a neuron, each optical neuron either transmits or reflects incoming light to the next layer. Researchers published their findings Thursday in the journal Science.
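One common way to picture "changing the phase and amplitude of light" is to treat a light wave as a complex number and each optical neuron as a multiplier on it. The sketch below rests on that assumption. It is not the researchers' model, it ignores how light actually spreads between layers, and the function names are invented for illustration.

```python
import cmath
import math

def optical_neuron(incoming_field, amplitude, phase):
    """Scale the wave by `amplitude` and delay it by `phase` (in radians)."""
    return incoming_field * amplitude * cmath.exp(1j * phase)

def detector_intensity(fields):
    """A detector sees intensity: the squared magnitude of the summed waves."""
    return abs(sum(fields)) ** 2

wave = 1.0 + 0.0j  # hypothetical incoming light with unit amplitude

# Two neurons that leave the phase alone: their waves reinforce each other.
in_step = [optical_neuron(wave, 1.0, 0.0), optical_neuron(wave, 1.0, 0.0)]

# Shift one neuron by half a wavelength (pi radians): the waves cancel.
out_of_step = [optical_neuron(wave, 1.0, 0.0), optical_neuron(wave, 1.0, math.pi)]

bright = detector_intensity(in_step)
dark = detector_intensity(out_of_step)
print(bright, dark)
```

Tuning those phase shifts so that light piles up on the right detector and cancels everywhere else is, loosely speaking, what stands in for adjusting the numbers in a conventional network.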

The researchers have taken AI hardware in an interesting new direction, because they say it’s possible to pair optical neural networks with computers so they work in parallel and share the workload. Researchers believe these components, dubbed diffractive deep neural networks (D2NNs), could be easily scaled up using advanced 3D-printing methods to add layers and neurons. Another plus: A D2NN is very power-efficient, and that’s a big deal because current GPUs consume an awful lot of electricity and generate quite a bit of heat.

Merging GPUs and optical D2NNs could yield a faster, more energy-efficient engine for AI applications of the future, like, say, identifying Russian propaganda in your newsfeed.